Hyperscale & Cloud Data Center

Challenges of Hyperscale / Cloud Data Centers

- High risk of downtime

- Very high density load requirement

- High compute with huge variation in the IT loads

- No clarity on expected future load

- Unexpected customer load requirements

- New waves of end-user requirements

- Data center needs to cater to all loads, from low- to medium- to high-density applications

- Underutilization of ACE parameters: Availability, Capacity & Efficiency

- Rising energy bills & OpEx cost

- Need to maintain the SLA & cost

Create Virtual Facility using 6Sigma CFD software

A successful cloud business will need to ensure that every component, starting from the processor and ending at the data center itself, is “purpose-built”. From a physical infrastructure perspective, this means designing for power and cooling across the entire supply chain, from the Application-Specific Integrated Circuits (ASICs) through servers and racks to the data centers themselves.

But how? The best answer lies with a powerful modeling tool – our industry-leading Virtual Facility (VF). Designed from the ground up to be used for the design of the smallest components in the data center, right through to the data center itself, it is our view that the VF has the unmatched predictive accuracy necessary to safeguard your business from the uncertainty of data center performance.

Managing Risk in DC Design and Operations

The biggest risks during design are over-engineering and overspending on CapEx (capital expenditure). By contrast, a poorly designed facility can lead to overspending on OpEx (operational expenditure) due to upgrades to the power/cooling infrastructure.

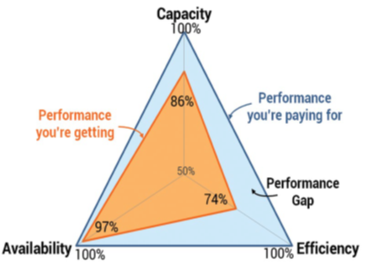

Successful data center design is all about winning the battle against change. To do that, you must understand the three variables that spell the difference between success and failure: availability (uptime), physical capacity and cooling efficiency (ACE).

To maximize ACE, it’s important to predict the risk before implementing any change. The VF is the leading industry tool for quantifying and visualizing the ACE trinity, its causes and effects, and the risk it poses to your data center operations.

Managing Risk at Server and Rack Level

The powerful tools of the Virtual Facility allow you to model IT equipment by leveraging existing CAD files and utilizing a large number of intelligent parts, such as components, heat sinks, fans, PCBs, thermoelectric coolers, heat exchangers and heat pipes, when necessary.

The advantage of all the data center components being built on the same architecture in the VF is clear: you can evaluate the performance of the server in the rack that will eventually be deployed in the data center. This is truly starting with the “end in mind”, and it removes a great deal of future risk from the design process.
